How to Identify a GPU Slot Error on a Server with Ubuntu Commands

Tracking down a GPU slot error can be difficult, especially in high-performance environments such as AI model training, GPU hosting, or server-based deep learning. If your server runs Ubuntu, several command-line tools and practical steps can help you determine whether a slot-related error is affecting system performance.

In this knowledge base article, we walk you through finding GPU slot issues with practical Ubuntu commands. The guide is especially useful for administrators managing a GPU server, an AI GPU cluster, or a dedicated GPU server such as those offered by GPU4HOST.

Why GPU Slot Errors Matter in a GPU Server

A GPU slot error usually indicates a communication failure between the graphics card and the server’s motherboard through the PCIe slot. In mission-critical applications such as:

- AI image generator platforms

- NVIDIA A100-powered GPU servers

- GPU clusters, especially for deep learning

… this type of error can cause unexpected downtime, failed or corrupted model training runs, or reduced performance.

Common Symptoms of a GPU Slot Error

Before diving into the commands, it helps to recognize the common signs of a GPU slot error:

- The system does not detect the GPU.

- PCIe- or GPU-related errors appear in dmesg.

- Performance is unstable during AI workloads.

- The GPU overheats because of poor seating or improper contact.

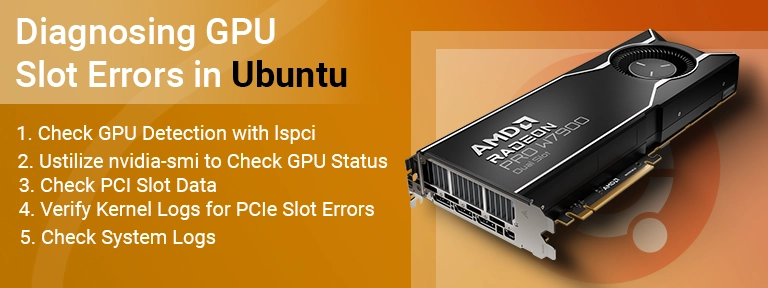

Step-by-Step: Diagnosing GPU Slot Errors in Ubuntu

1. Check GPU Detection with lspci

lspci | grep -i nvidia

This command lists all PCIe devices and filters the output for NVIDIA entries. If an installed GPU does not appear, that may indicate a GPU slot error.

Bonus Tip: On a healthy GPU server, every installed GPU (such as an NVIDIA A100) should be listed here. Some NVIDIA cards also expose an HDMI audio function, so a single card can produce more than one line.
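You can script this check to compare the number of GPUs lspci reports with the number you know are installed. Below is a minimal POSIX shell sketch, not a vendor tool. The function names count_nvidia_gpus and check_gpu_count are hypothetical. The sketch assumes GPUs appear as “VGA compatible controller” or “3D controller” entries; data-center cards such as the A100 usually report as 3D controllers.

```shell
# Hypothetical helpers for step 1: source this file, then pipe lspci into them.

# count_nvidia_gpus: count NVIDIA GPUs in `lspci` output read from stdin.
# Only "VGA compatible controller" and "3D controller" lines are counted, so an
# NVIDIA HDMI audio function on the same card is not counted as a second GPU.
count_nvidia_gpus() {
  grep -i 'nvidia' | grep -Eic 'vga compatible controller|3d controller'
}

# check_gpu_count EXPECTED: compare the detected count with the number of GPUs
# you expect to be installed. Prints a verdict; returns 1 if any are missing.
check_gpu_count() {
  expected="$1"
  found="$(count_nvidia_gpus)"
  if [ "$found" -lt "$expected" ]; then
    echo "WARNING: expected $expected NVIDIA GPU(s), lspci shows $found"
    return 1
  fi
  echo "OK: $found NVIDIA GPU(s) visible on the PCIe bus"
}
```

For example, on a server that should hold eight GPUs, lspci | check_gpu_count 8 prints a warning if any card is missing from the bus. It also returns a non-zero status, so it can be used in other scripts.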

2. Use nvidia-smi to Check GPU Status

nvidia-smi

If nvidia-smi fails to run or reports no GPUs, your dedicated GPU server may have a GPU slot error or a driver issue.

Output you want to see:

- Temperature

- GPU name

- Utilization stats

- Process usage

If you see “No devices were found” instead, treat it as a red flag.
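For scripted checks, nvidia-smi can print selected fields as CSV instead of the full table. The sketch below assumes you run nvidia-smi --query-gpu=index,pci.bus_id,name,temperature.gpu --format=csv,noheader and pipe the result into it. The function name is hypothetical. It flags both the “No devices were found” message and an empty result.

```shell
# summarize_nvidia_smi: read the CSV produced by
#   nvidia-smi --query-gpu=index,pci.bus_id,name,temperature.gpu --format=csv,noheader
# on stdin, print one line per GPU, and return 1 if no GPUs are listed.
summarize_nvidia_smi() {
  awk -F', ' '
    /No devices were found/ { nodev = 1; next }   # nvidia-smi saw no GPUs
    NF >= 4 {                                     # index, bus id, name, temperature
      n++
      printf "GPU %s at %s: %s, temperature %s\n", $1, $2, $3, $4
    }
    END {
      if (nodev || n == 0) { print "RED FLAG: nvidia-smi reports no GPUs"; exit 1 }
      printf "%d GPU(s) reported by nvidia-smi\n", n
    }'
}
```

Usage: nvidia-smi --query-gpu=index,pci.bus_id,name,temperature.gpu --format=csv,noheader 2>&1 | summarize_nvidia_smi. The 2>&1 redirect sends any error text to the parser as well. That text contains no GPU lines, so the function still reports a red flag.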

3. Check PCI Slot Data

sudo lshw -C display

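This command shows hardware details for the display-class devices (including GPUs) connected to the server.

Look for:

- logical name: missing

- UNCLAIMED shown next to the *-display entry (no driver has bound to the device)

- resources: missing (no IRQ or memory ranges listed)

Any of these could point to a GPU slot error. Note that UNCLAIMED can also simply mean the NVIDIA driver is not installed or not loaded, so rule that out first.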
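The lshw check can also be automated. This awk-based sketch reads the output of sudo lshw -C display and reports any display device that meets one of three conditions: it is marked UNCLAIMED, its configuration line has no driver= entry, or it lists no resources. The function name is hypothetical. The sketch assumes lshw’s usual layout, where each device block starts with a *-display line.

```shell
# flag_display_devices: read `lshw -C display` output on stdin and print a
# SUSPECT line for every display device that is UNCLAIMED, has no "driver="
# on its configuration line, or has no resources line.
flag_display_devices() {
  awk '
    function report() {
      if (seen && (unclaimed || !driver || !res))
        printf "SUSPECT %s unclaimed=%d driver=%d resources=%d\n", bus, unclaimed, driver, res
    }
    /\*-display/ {                     # start of a new device block
      report()                         # finish the previous block first
      seen = 1; bus = "unknown"; driver = 0; res = 0
      unclaimed = ($0 ~ /UNCLAIMED/)
      next
    }
    /bus info:/      { bus = $NF }
    /configuration:/ { if ($0 ~ /driver=/) driver = 1 }
    /resources:/     { res = 1 }
    END { report() }                   # finish the last block
  '
}
```

sudo lshw -C display | flag_display_devices prints nothing when every display device is claimed and has resources. Each SUSPECT line includes the device’s bus info, so you can match it against lspci output. Onboard management-controller graphics may also be flagged if no driver is loaded for it.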

4. Verify Kernel Logs for PCIe Slot Errors

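sudo dmesg | grep -i pci

This filters the kernel ring buffer for PCI-related messages. On recent Ubuntu releases, reading dmesg requires root privileges, hence sudo. A GPU slot error may appear as messages such as: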

- “unable to enable memory access”

- “Link training failed”

- “device not found”

These messages help confirm whether your GPU hosting server has a hardware-level problem.
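To scan for these messages in one pass, the sketch below searches kernel log text for the three messages listed above. The function and variable names are hypothetical. The pattern list also adds “AER:”, which is our own assumption: it matches the PCIe Advanced Error Reporting lines the kernel logs when it detects errors on a PCIe link. Exact wording varies by kernel and driver version, so treat the pattern list as a starting point.

```shell
# Patterns: the first three are the messages listed above; "AER:" is an added
# assumption that catches PCIe Advanced Error Reporting lines.
PCIE_ERROR_PATTERNS='unable to enable memory access|link training failed|device not found|AER:'

# scan_pcie_errors: read kernel log text on stdin, print every line matching
# PCIE_ERROR_PATTERNS (case-insensitively), and return 1 if anything matched.
scan_pcie_errors() {
  if grep -Ei "$PCIE_ERROR_PATTERNS"; then
    return 1   # suspicious lines were found and printed
  fi
  return 0     # nothing matched
}
```

Usage: sudo dmesg | scan_pcie_errors. The function prints the matching lines and exits with status 1 when it finds any, so it can gate a larger health-check script.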

5. Check System Logs

sudo journalctl -k | grep -i nvidia

This command shows kernel log messages related to NVIDIA GPU activity. It can reveal whether the GPU was detected and then suddenly went offline, another strong sign of a slot error.
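NVIDIA’s kernel driver reports GPU faults as “Xid” messages in the kernel log. Xid 79, which NVIDIA documents as “GPU has fallen off the bus”, is the one most closely associated with a GPU dropping offline. The sketch below assumes the common “NVRM: Xid (PCI:address): code, …” line format. Both function names are hypothetical.

```shell
# list_xid_events: read kernel log text (for example `journalctl -k` output) on
# stdin and print "<pci address> Xid <code>" for each NVIDIA Xid line found.
# Assumes the common "NVRM: Xid (PCI:<address>): <code>, ..." message format.
list_xid_events() {
  sed -n 's/.*NVRM: Xid (PCI:\([0-9A-Fa-f:.]*\)): \([0-9]*\).*/\1 Xid \2/p'
}

# gpu_fell_off_bus: succeed (exit status 0) if the log contains at least one
# Xid 79 event, which NVIDIA documents as "GPU has fallen off the bus".
gpu_fell_off_bus() {
  list_xid_events | grep -q ' Xid 79$'
}
```

sudo journalctl -k | list_xid_events lists every Xid event with its PCI address. sudo journalctl -k | gpu_fell_off_bus returns success only when an Xid 79 is present. By default, journalctl -k shows only the current boot. If the server was restarted after the fault, add -b -1 to inspect the previous boot.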

What to Do if You Get a GPU Slot Error

Once the commands above have confirmed a GPU slot error, work through the following steps:

Reseat the GPU

Power down the server, then remove the GPU and reinsert it firmly into the PCIe slot. Thermal expansion, dust, or vibration can loosen connections over time.

Try Another Slot

If your GPU server has multiple PCIe slots, move the GPU to another slot to determine whether the problem stays with the slot or follows the GPU.
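Before moving a card, it helps to record which PCI address and physical slot each GPU currently occupies, then compare after the move. The sketch below parses lspci -vmm output, whose records are blank-line separated “Field:<tab>value” lines. The function name is hypothetical. The PhySlot field appears only when the platform firmware reports slot numbers, so the sketch prints “unknown” otherwise.

```shell
# gpu_slot_map: read `lspci -vmm` output on stdin (blank-line separated records
# of "Field:<tab>value" lines) and print "<bus address> <physical slot>" for
# each NVIDIA VGA or 3D controller. Prints "unknown" when PhySlot is absent.
gpu_slot_map() {
  awk '
    BEGIN { RS = ""; FS = "\n" }     # one record per device, one field per line
    {
      slot = "?"; phys = "unknown"; vendor = ""; devclass = ""
      for (i = 1; i <= NF; i++) {
        line = $i
        if (line ~ /^Slot:/)         { sub(/^Slot:[ \t]*/, "", line); slot = line }
        else if (line ~ /^PhySlot:/) { sub(/^PhySlot:[ \t]*/, "", line); phys = line }
        else if (line ~ /^Vendor:/)  { vendor = line }
        else if (line ~ /^Class:/)   { devclass = line }
      }
      if (vendor ~ /NVIDIA/ && devclass ~ /VGA compatible controller|3D controller/)
        print slot, phys
    }'
}
```

Before moving the card, run lspci -vmm | gpu_slot_map > before.txt. Afterwards, run lspci -vmm | gpu_slot_map > after.txt. Then diff before.txt after.txt shows whether the GPU reappeared at its new address. The file names are only examples.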

Swap GPUs

Insert a known-good NVIDIA GPU (such as a spare NVIDIA A100) into the suspect slot. If that GPU also fails, the fault most likely lies with the slot rather than the original GPU hardware.

BIOS/UEFI Reset

Some GPU servers may fail to detect new hardware because of outdated BIOS settings. Reset the BIOS to its defaults or update it to the latest version.

Use Professional Monitoring Tools

For a GPU cluster or a managed hosting environment such as GPU4HOST, enterprise-level monitoring tools can provide slot-level diagnostics.

Preventing Future GPU Slot Errors

Keeping GPU hosting environments reliable requires ongoing monitoring and preventive care:

- Use server-grade hardware: Workstations are not always engineered for sustained 24/7 GPU loads such as AI model training.

- Choose reliable AI hosting: Platforms such as GPU4HOST provide infrastructure with advanced cooling and stable components.

- Monitor thermals and power: Excessive heat or power surges can physically damage PCIe slots.

- Schedule regular audits: Review logs, utilization, and slot health weekly or monthly.
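A scheduled audit can compare what the PCIe bus sees with what the NVIDIA driver sees. A GPU that lspci lists but nvidia-smi -L omits is worth investigating. The sketch below assumes one “GPU n: …” line per GPU in nvidia-smi -L output. The LSPCI and NVSMI variables are hypothetical overrides, added so the function can be dry-run on a machine without GPUs.

```shell
# Commands used by the audit; overridable (hypothetical variables) so the
# function can be dry-run on a machine without GPUs.
LSPCI="${LSPCI:-lspci}"
NVSMI="${NVSMI:-nvidia-smi}"

# gpu_audit: compare the number of NVIDIA GPUs on the PCIe bus (lspci) with the
# number the driver lists (nvidia-smi -L) and print one timestamped line.
# Returns 1 when the counts differ, e.g. a card is on the bus but not usable.
gpu_audit() {
  pci_count="$($LSPCI | grep -i nvidia | grep -Eic 'vga compatible controller|3d controller')"
  smi_count="$($NVSMI -L 2>/dev/null | grep -c '^GPU ')"
  stamp="$(date '+%Y-%m-%d %H:%M:%S')"
  if [ "$pci_count" -ne "$smi_count" ]; then
    echo "$stamp WARN lspci=$pci_count nvidia-smi=$smi_count"
    return 1
  fi
  echo "$stamp OK gpus=$pci_count"
}
```

To run it weekly, save the block followed by a final gpu_audit line as a script; the path /usr/local/bin/gpu-audit.sh used here is hypothetical. Then add a root crontab entry such as 0 6 * * 1 /usr/local/bin/gpu-audit.sh >> /var/log/gpu-audit.log 2>&1, which runs it every Monday at 06:00.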

Final Thoughts

A GPU slot error can undermine the performance and reliability of your dedicated GPU server or GPU cluster, especially when it runs AI-heavy workloads such as AI image generation or deep learning model training. Administrators can quickly identify and troubleshoot GPU detection errors with standard Linux tools such as lspci, lshw, dmesg, and journalctl, together with NVIDIA’s nvidia-smi.

If you host your workloads on a platform such as GPU4HOST, you also benefit from enterprise-level GPU diagnostics, thermal controls, and 24/7 expert support to help resolve hardware faults quickly.

Stay vigilant. Don’t let a routine GPU slot error slow down your AI or GPU-based applications. Check regularly and host carefully.