NVIDIA Tesla A40 Hosting

The NVIDIA A40 unites both the performance and components important for extensive display experiences, AR/VR, and a lot more.

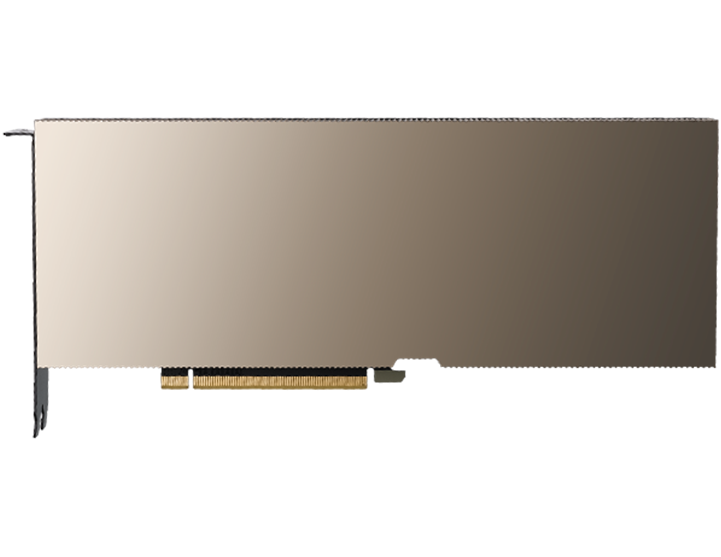

The NVIDIA A100 Tensor Core carries out unmatched acceleration to boost the world’s highly flexible data centers for data analytics, HPC, and AI applications.

Get Started

Hosted GPU dedicated servers with NVIDIA A100 GPUs carry out excellent performance over integrated graphics. Unmatched performance for AI model training, data inference, deep learning, and complex simulations, guaranteeing high scalability and productivity in enterprise-level hosting cases.

An A100 GPU can be easily divided into 7 different GPUs, completely isolated at the level of hardware with their individual computing cores, cache memory, and high-bandwidth memory. MIG gives all developers full access to boost acceleration for various applications, and IT administrators can provide appropriate GPU acceleration for every work.

Whether utilizing MIG to divide an A100 GPU into small portions or NVLink to link various GPUs to speed heavy workloads, A100 can easily manage various-sized acceleration requirements, from the little job to the large-scale heavy workload.The versatility of the NVIDIA A100 states that all IT managers can easily increase the utilization of GPU servers in their data centers.

AI networks have a huge amount of useful parameters. Not every single parameter is required for correct estimations, and some can be easily changed to zeros, making all the models “sparse” without negotiating with accuracy. While the feature of sparsity more easily advantages AI inference, it can also enhance the working of AI model training.

NVIDIA NVLink in the case of A100 offers two times more accurate output as compared to the former generation. When united with NVIDIA NVSwitch™, up to almost 16 A100 GPUs can be easily interlinked at up to 600 gigabytes every second (GB/sec), uniting the maximum application performance that is possible on a solo server.

NVIDIA A100 has 312 teraFLOPS (TFLOPS) of performance related to deep learning. That is almost 20 times the Tensor floating-point operations per second (FLOPS) especially for deep learning model training and also 20X the Tensor tera operations per second (TOPS) for deep learning inference as compared to Volta GPUs of NVIDIA.

With up to almost 80 gigabytes of HBM2e, the A100 GPUs have the most powerful GPU memory bandwidth of almost more than 2 TB per second and a dynamic random-access memory (DRAM) usage productivity of 95 percent. A100 offers 1.7 times more memory bandwidth as compared to the former generation.

The platform boosts over 2,000 apps, containing every single deep learning agenda. A100 is almost available at every place,

from desktops to servers to cloud services, carrying out both optimal performance gains and money-saving options.

A100 have advanced-level features to enhance heavy workloads. It boosts a wide variety of precision, ranging from FP32 to INT4. Multi-Instance GPU (MIG) allows various networks to operate at the same time on a single A100 for reliable usage of computing resources. And structural sparsity always supports offering almost two times more reliable performance on top of A100’s some other inference performance advantages.

The A100 is developed to boost AI model utilization and inference tasks, allowing quicker and more productive processing of rigid neural networks. Its innovative capabilities support reliable and accurate estimations, making it one of the best choices for all demanding AI applications in the case of data centers and research landscapes.

NVIDIA A100 presents double-precision tensor cores to carry out the robust leap in HPC performance since GPUs are introduced. With 80GB of the quickest GPU memory, researchers can easily decrease up to 10-hour, double-precision complex simulation to under almost 4 hours on the A100 server. HPC applications can easily influence TF32 to attain up to 11x more accurate outcomes for single-precision, solid matrix-multiply processes.

Its Ampere architecture improves parallel processing and productivity, making it one of the best choices for handling scientific simulations, data analysis, and complex computations. The A100’s innovative features allow quicker implementation of demanding heavy workloads, determining progressions in engineering, scientific research, and data-accelerated applications.

AI training models are exploding in the case of complexity as they easily take on high-level challenges like conversational artificial intelligence. Training all of them needs intensive computing power and reliability. NVIDIA A100 Tensor Cores with Tensor Float (TF32) offers up to 20X reliable performance over the NVIDIA Volta with almost no changes in the code and double acceleration with automatic integrated precision and FP16.

Its innovative Ampere architecture boosts the training of extensive neural networks, fundamentally decreasing the time needed to process and enhance all complex models. With improved Tensor Cores and MIG capabilities, the A100 helps in productive and reliable training for cutting-edge AI applications and research.

Data scientists need to be easily able to examine, visualize, and turn all large datasets into useful insights. But expanded outcomes are frequently hung up by huge datasets dispersed across various servers. Boosted servers with A100 offer the required computing power—including extra memory, over almost 2 TB per sec of memory bandwidth, and reliability with NVIDIA® NVSwitchTM and NVLink® to manage all heavy workloads.

Its Ampere architecture boosts data processing and valuations, allowing quick insights from datasets. The progressive features of A100, containing tensor cores and high memory bandwidth, enable quicker querying and real-time data analytics, driving outcomes in various fields like healthcare, finance, engineering, and scientific research.

If you need to perform research, deep learning training, and many more, then you can have some better options.

The NVIDIA A40 unites both the performance and components important for extensive display experiences, AR/VR, and a lot more.

Offers robust performance along with 16 GB of HBM2 memory and 640 tensor cores, best for AI model training and many more.

The Tesla K40 offers robust computing power with 12 GB of GDDR5 memory and 2880 CUDA cores, ideal for complex computing.

Contact us either through phone calls or live chat and get the solution to your problem.