GPU Pod Kubernetes Guide: Know When It’s Scheduled & Working

Kubernetes has become the de facto standard for container orchestration. Whether you are training demanding machine learning models or deploying AI-powered services such as an AI image generator, GPU acceleration is usually necessary. But how do you make sure that your GPU pod is actually scheduled on a node with GPU capabilities? Misconfigurations or scheduling problems can quietly waste valuable resources. This guide walks through what to check about GPU pod scheduling in Kubernetes environments.

This tutorial is relevant to anyone using high-powered infrastructure such as a GPU dedicated server, a GPU cluster, or cloud GPU hosting from providers like GPU4HOST. We’ll also cover essential tips for verification and performance optimization using real-world tools.

Why Scheduling Matters for GPU Pods in Kubernetes

Let’s be honest: GPUs aren’t cheap. Whether you are using an NVIDIA A40, an NVIDIA V100, or another premium card, it’s important to confirm that those resources are actually being used. Kubernetes can manage GPU resources, but it does not guarantee that your GPU pod will be scheduled where it should be.

Failing to check can lead to:

- Underused GPU servers.

- Longer AI model training or image rendering times.

- Higher cloud costs caused by inefficient GPU hosting.

- Misleading signals about job progress or completion.

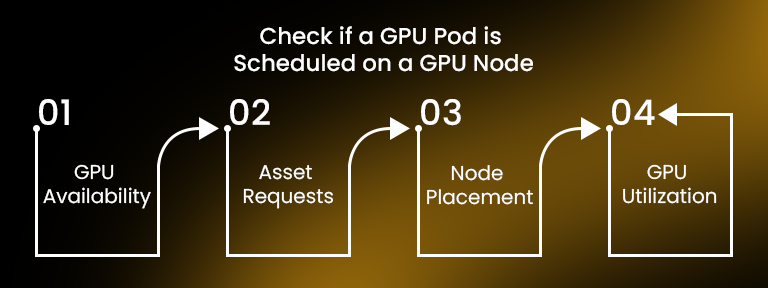

Step-by-Step Guide: Check if a GPU Pod is Scheduled on a GPU Node

The steps below use kubectl and a few system checks to confirm that your GPU pod is scheduled correctly.

1. Ensure Nodes Have GPU Resources

First of all, make sure that your Kubernetes cluster has GPU-capable nodes.

kubectl describe nodes | grep -i nvidia.com/gpu

If the output shows a value such as nvidia.com/gpu: 1, the node advertises at least one GPU. If nothing is printed, no node in the cluster currently exposes GPU resources.
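The grep output does not say which node each line belongs to. As a rough sketch, the allocatable GPU count can be listed per node instead. The function names `list_node_gpus` and `gpu_nodes` are hypothetical helpers, and the sketch assumes GPUs are exposed under the `nvidia.com/gpu` resource name used above.

```shell
#!/bin/sh
# List every node with its allocatable GPU count. Nodes that do not
# expose the resource show "<none>" in the second column.
list_node_gpus() {
  kubectl get nodes --no-headers \
    -o custom-columns='NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu'
}

# Read "NAME GPU" rows on stdin and keep only the names of nodes
# whose GPU column is a positive integer.
gpu_nodes() {
  awk '$2 ~ /^[0-9]+$/ && $2 > 0 { print $1 }'
}

# Usage: list_node_gpus | gpu_nodes
```

Splitting the query from the filter keeps the filter easy to test without a cluster.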

2. Check Pod Resource Requests for GPU

When defining your pod, ensure it explicitly requests GPU resources:

resources:
  limits:
    nvidia.com/gpu: 1
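That fragment belongs inside a container entry of the pod spec. For context, here is a minimal sketch of a complete manifest, written out by a small shell helper. The helper name `write_gpu_pod_manifest`, the pod name `gpu-test`, the container name, and the image tag are all assumptions for illustration; substitute your own.

```shell
#!/bin/sh
# Write a minimal GPU pod manifest to the path given as $1.
# Pod name, container name, and image are placeholders (assumptions).
write_gpu_pod_manifest() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-test
spec:
  restartPolicy: Never
  containers:
    - name: cuda
      image: nvidia/cuda:12.4.1-base-ubuntu22.04  # assumed image tag
      command: ["nvidia-smi"]
      resources:
        limits:
          nvidia.com/gpu: 1  # GPUs are requested under limits
EOF
}

# Usage: write_gpu_pod_manifest gpu-pod.yaml && kubectl apply -f gpu-pod.yaml
```

Setting only the limit is enough here, because Kubernetes uses the GPU limit as the request when no request is given.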

You can also check this after deployment:

kubectl get pod <pod-name> -o jsonpath='{.spec.containers[*].resources}'

This verifies that the GPU pod requests GPU resources as required.
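When a pod has several containers, it can be handy to reduce that check to a single number. The sketch below narrows the JSONPath to the GPU limit and adds up the values; `pod_gpu_limits` and `sum_counts` are hypothetical helper names, not kubectl features.

```shell
#!/bin/sh
# Print the nvidia.com/gpu limit of every container in pod $1,
# space-separated (empty when no container sets one).
pod_gpu_limits() {
  kubectl get pod "$1" \
    -o jsonpath='{.spec.containers[*].resources.limits.nvidia\.com/gpu}'
}

# Sum a whitespace-separated list of integers read from stdin.
# Prints 0 when the input is empty.
sum_counts() {
  tr -s ' ' '\n' | awk '/^[0-9]+$/ { total += $1 } END { print total + 0 }'
}

# Usage: pod_gpu_limits <pod-name> | sum_counts
```

A result of 0 means the pod never asked for a GPU, so the scheduler had no reason to place it on a GPU node.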

3. Check That the Pod Is Running on a GPU Node

Once deployed, find out the node where the pod is running:

kubectl get pod <pod-name> -o wide

Then, check the node:

kubectl describe node <node-name>

Make sure that the node lists nvidia.com/gpu as a resource under Capacity and Allocatable.
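The two lookups above can be chained into one check. This is a minimal sketch, assuming the `nvidia.com/gpu` resource name; the function name `check_pod_on_gpu_node` is hypothetical.

```shell
#!/bin/sh
# Report which node pod $1 landed on and how many GPUs that node
# advertises. Returns non-zero if the pod is unscheduled or the node
# advertises no GPUs.
check_pod_on_gpu_node() {
  pod=$1
  node=$(kubectl get pod "$pod" -o jsonpath='{.spec.nodeName}')
  if [ -z "$node" ]; then
    echo "$pod: not scheduled yet (see events in 'kubectl describe pod $pod')"
    return 1
  fi
  gpus=$(kubectl get node "$node" \
    -o jsonpath='{.status.allocatable.nvidia\.com/gpu}')
  case "$gpus" in
    ''|0) echo "$pod: on $node, which advertises no GPUs"; return 1 ;;
    *)    echo "$pod: on $node with $gpus allocatable GPU(s)" ;;
  esac
}

# Usage: check_pod_on_gpu_node <pod-name>
```

An empty node name usually means the pod is still Pending, which is worth checking before looking at the node at all.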

4. Check GPU Utilization Inside the Pod

If your pod is running on a GPU-enabled node, you can check GPU utilization from inside the container:

kubectl exec -it <pod-name> -- nvidia-smi

This command reports the current GPU utilization, verifying that your GPU pod is not only scheduled but actively using the GPU.
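For scripted checks, nvidia-smi can also emit CSV instead of its default table. The sketch below queries utilization and memory and flags GPUs that look idle. The helper names `pod_gpu_stats` and `flag_idle_gpus` are hypothetical, and the 10% default threshold is an arbitrary value chosen for illustration.

```shell
#!/bin/sh
# Query per-GPU utilization (%) and memory (MiB) from inside pod $1 as CSV.
pod_gpu_stats() {
  kubectl exec "$1" -- nvidia-smi \
    --query-gpu=index,utilization.gpu,memory.used,memory.total \
    --format=csv,noheader,nounits
}

# Read that CSV on stdin and print GPUs whose utilization is below the
# threshold in $1 (default 10). Rows with non-numeric utilization are skipped.
flag_idle_gpus() {
  awk -F', *' -v min="${1:-10}" '
    $2 ~ /^[0-9]+$/ && $2 + 0 < min {
      print "GPU " $1 " idle: " $2 "% util, " $3 "/" $4 " MiB"
    }
  '
}

# Usage: pod_gpu_stats <pod-name> | flag_idle_gpus 10
```

A single reading can catch a busy job between batches, so it is safer to sample a few times before concluding that a GPU is idle.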

Bonus: Label GPU Nodes for Easier Targeting

You can label GPU-enabled nodes so that pods are easier to target at them:

kubectl label nodes <node-name> hardware-type=GPU

Then, use a node selector in your pod specification:

nodeSelector:
  hardware-type: GPU

This practice, paired with node management in large GPU clusters, makes GPU hosting more efficient.
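In a larger cluster, labeling nodes one at a time gets tedious. Here is a small sketch that applies the same label to a list of node names read from standard input. The function name `label_gpu_nodes` and the `DRY_RUN` variable are hypothetical conveniences, not kubectl features.

```shell
#!/bin/sh
# Read node names on stdin, one per line, and label each hardware-type=GPU.
# Set DRY_RUN=1 to print the commands instead of running them.
label_gpu_nodes() {
  while read -r node; do
    [ -n "$node" ] || continue
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "kubectl label nodes $node hardware-type=GPU --overwrite"
    else
      kubectl label nodes "$node" hardware-type=GPU --overwrite
    fi
  done
}

# Usage: printf 'node-a\nnode-b\n' | label_gpu_nodes
# Afterwards, list the labeled nodes:
#   kubectl get nodes -l hardware-type=GPU
```

Keep in mind that a node selector only steers placement. The pod still needs its nvidia.com/gpu limit to be given a GPU.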

Monitoring GPU Pod Performance

Monitoring is essential when running GPU pod workloads. Tools such as Grafana, Prometheus, and NVIDIA DCGM help track GPU metrics in real time. Use these tools to:

- Monitor memory and compute utilization.

- Spot underutilized GPUs.

- Optimize workloads for better performance on a GPU server.
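As one way to spot underutilization without opening a dashboard, the sketch below scans Prometheus text-format metrics for low values of `DCGM_FI_DEV_GPU_UTIL`, the GPU utilization gauge published by NVIDIA's DCGM exporter. The function name `low_util_gpus`, the 10% default threshold, and the exporter address in the usage line are assumptions for illustration.

```shell
#!/bin/sh
# Read Prometheus text-format metrics on stdin and print the gpu label and
# value of every DCGM_FI_DEV_GPU_UTIL sample below the threshold in $1
# (default 10). Assumes samples carry no trailing timestamp.
low_util_gpus() {
  awk -v min="${1:-10}" '
    /^DCGM_FI_DEV_GPU_UTIL[{]/ {
      value = $NF
      gpu = "?"
      # Extract the value of the gpu="..." label, if present.
      if (match($0, /gpu="[^"]*"/)) {
        gpu = substr($0, RSTART + 5, RLENGTH - 6)
      }
      if (value + 0 < min) print "gpu=" gpu " util=" value
    }
  '
}

# Usage (hypothetical exporter address):
#   curl -s http://dcgm-exporter.example:9400/metrics | low_util_gpus 10
```

For ongoing monitoring, the same condition is better expressed as a Prometheus alert or a Grafana panel than as a one-off script.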

Real-World Use Case: AI Image Generator in Kubernetes

Say you are deploying an AI image generator in a containerized environment. Models of this kind generally need powerful GPUs and fast scaling. Here is where a GPU pod deployment on Kubernetes stands out:

- Schedule pods automatically on NVIDIA V100 or A40 nodes.

- Deploy quickly with GPU hosting from GPU4HOST.

- Monitor in real time to improve throughput.

With a correctly configured setup, you could reduce image generation time by roughly 40 to 60% compared with CPU nodes. That’s the benefit of matching your app to the appropriate GPU server.

Why GPU4HOST is Perfect for GPU Pod Kubernetes Deployments

When you run GPU pod workloads on Kubernetes, trustworthy infrastructure is non-negotiable. GPU4HOST offers:

- Cutting-edge GPU hosting.

- Quick access to robust GPUs such as NVIDIA A40 & NVIDIA V100.

- Instant deployment and 24/7 support for GPU dedicated server configurations.

Whether you are training models or deploying an AI image generator, GPU4HOST makes sure that your pods have the power they need.

Final Thoughts

Scheduling a GPU pod in Kubernetes correctly ensures you get the full value of your GPU server. Whether you are using a self-managed GPU server or cloud infrastructure, these steps can help:

- Make sure that the pod is on a GPU-enabled node.

- Check that the GPU is being used.

- Monitor and improve constantly.

This helps you get a high ROI on resources such as an NVIDIA A40, a GPU cluster, or dedicated GPU hosting from platforms such as GPU4HOST. High performance. Less waste. That’s the Kubernetes-GPU benefit.