A Guide to Decode nvidia-smi lspci Output for GPU Management

At the time of handling high-performance GPU servers for AI model development, deep learning, or GPU hosting, one of the essential tools every system admin should master is checking the nvidia-smi lspci Output. Even if you are optimizing an AI GPU cluster, deploying a GPU dedicated server, or working with the robust NVIDIA A100, these commands provide valuable insights into your hardware setup.

This guide offers a practical overview of what the nvidia-smi and lspci | grep -i nvidia commands display, how to interpret their results, and why they are necessary for modern GPU server environments like GPU4HOST.

What is nvidia-smi?

The nvidia-smi (which stands for NVIDIA System Management Interface) is basically a command-line utility, added with the NVIDIA GPU drivers, that gives thorough details about your installed AI GPU hardware. It’s an easy-to-use tool for checking:

- GPU usage

- Driver version

- Temperature

- Power utilization

- Memory usage

- Active processes

The nvidia-smi lspci output is mainly useful at the time of handling GPU clusters and tracking hardware performance across different GPU servers.

What is lspci | grep -i nvidia?

The command lspci|grep -i nvidia records PCI devices and filters for all those associated with NVIDIA. It’s mainly utilized to:

- Make sure that your system finds the NVIDIA hardware.

- Check the right model and PCI address.

- Guarantee compatibility for some tools like container orchestration and nvidia-smi platforms.

This command is a basic part of checking your GPU hardware setup before quickly deploying GPU hosting solutions.

Interpreting nvidia-smi lspci Output: A Practical Instance

Let’s completely break down the typical results you’ll encounter from every single command in a real-world GPU dedicated server setting.

Example Output from lspci | grep -i nvidia

18:00.0 3D controller: NVIDIA Corporation A100-PCIE-40GB (rev a1)

From this output, you confirm:

- The NVIDIA A100 is found.

- The PCI slot (18:00.0) matches the Bus-ID in nvidia-smi.

- The type of device is a: 3D controller.

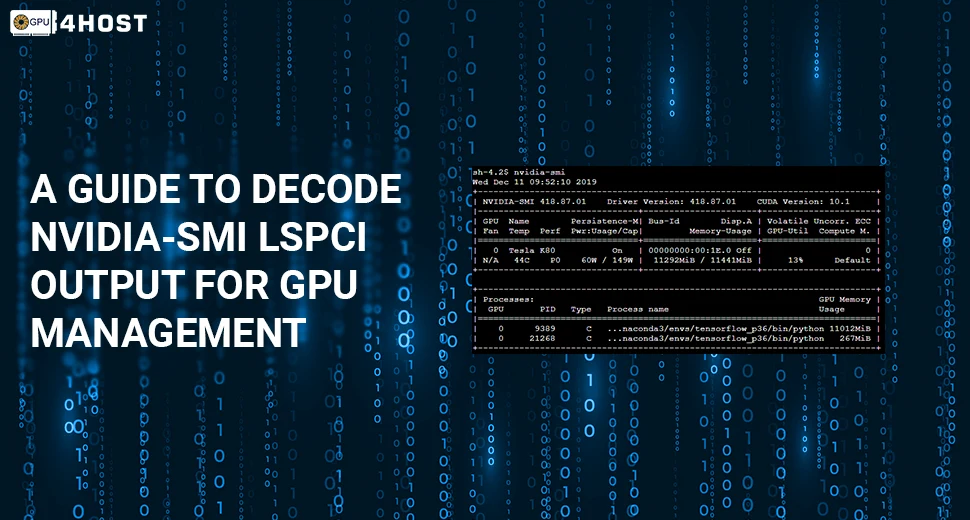

Example Output from nvidia-smi

+—————————————————————————–+

| NVIDIA-SMI 535.104.05 Driver Version: 535.104.05 CUDA Version: 12.2 |

|——————————-+———————-+———————-+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 A100-PCIE-40GB Off | 00000000:18:00.0 Off | 0 |

| N/A 42C P0 70W / 250W | 0MiB / 40536MiB | 0% Default |

+——————————-+———————-+———————-+

Here you can mark some points from this:

- GPU Name: Helpful for making sure that you have an NVIDIA A100.

- Bus-ID: Will work with the output of lspci.

- Power and Temp: Keep an eye on your AI GPU health.

- Memory Usage: Essential for handling AI image generator workloads or training deep learning models.

All these noteworthy details help with container deployments, PCI passthrough, and GPU server scaling.

Why nvidia-smi lspci Output Is Vital for GPU4HOST Clients?

At GPU4HOST, where both transparency and performance are a must, knowing about the nvidia-smi lspci output helps all our clients:

- Confirm the presence and specifications of their allocated GPU servers.

- Check thermal and power metrics at the time of complex tasks.

- Validate resource allocation for AI image generator or AI GPU tasks.

- Resolve driver issues or messy setups.

Even if you are renting a single GPU dedicated server or handling a GPU cluster, this output makes sure that everything is running as per predictions.

Real-World Use Cases

Monitoring with Watch Command

To constantly keep an eye on your NVIDIA A100 Red Hat OpenShift setup:

watch -n 1 nvidia-smi

Helpful for finding spikes in GPU usage or correcting performance-related issues.

Integration with Kubernetes

In a Red Hat OpenShift or Kubernetes setting, utilize:

kubectl describe node <node-name>

To simply check node-level GPU resource status—confirm it with your nvidia-smi lspci output.

PCI Passthrough for VMs

You will utilize lspci IDs to easily pass AI GPU resources into VMs or containers:

vfio-pci bind 0000:18:00.0

Resolving with nvidia-smi lspci Output

If the nvidia-smi command shows no devices:

- Make sure that all the latest drivers are installed properly.

- Utilize lspci | grep -i nvidia to check hardware detection.

If lspci finds the card but nvidia-smi doesn’t:

- The driver may undiscovered or outdated.

- The kernel module may have sometimes failed to load.

This dual-output diagnosis is necessary for high uptime and performance in GPU-based settings.

Best Practices for GPU Server Management

- Run Daily Checks: Schedule daily reviews of your nvidia-smi lspci output to make sure that all GPU servers are working correctly.

- Automate Monitoring: Add nvidia-smi into your checking stack with some tools like Grafana, shell scripts, or Prometheus.

- Document Setups: Store consistent output snapshots to develop a deadline for performance specs.

- Match Tasks to GPUs: Utilize nvidia-smi to check if tasks like AI image generation or training NLP models need a high-memory GPU such as the NVIDIA A100.

- Manage Clusters Productively: Utilize PCI IDs to handle and adjust GPU clusters by managing workloads properly.

- Avoid Overheating: Act on initial signs of excess heating or high power draw displayed in the nvidia-smi lspci output.

- Optimize Virtualization: Use lspci outputs for binding particular GPUs to containers or virtual machines safely.

- Stay Driver-Aware: Always make sure that your GPU driver matches the present CUDA version and is shown properly in nvidia-smi lspci output.

At GPU4HOST, we add all these practices to each GPU server setup, making sure that our clients have a trustworthy, flexible, and insight-powered GPU hosting experience.

Conclusion

Understanding and productively utilizing the nvidia-smi lspci output is basic for all those who are deploying GPU servers, from new businesses to enterprise-level GPU clusters. With some valuable tools like these, working with robust GPUs such as the NVIDIA A100 and trustworthy hosting service providers such as GPU4HOST, you get full visibility and access over your GPU infrastructure.

Master all these outputs now and handle your GPU dedicated server environment with complete confidence. All the above-mentioned commands not only offer transparency but also support high performance in complex AI GPU tasks and GPU hosting processes.

Even if you are running an AI image generator, training machine learning models, or deploying GPU-based containers on NVIDIA A100 Red Hat OpenShift, a thorough understanding of the nvidia-smi lspci output will fully set you up for growth.