Why GPU Processors Are Built in Hundreds: The Complete Guide

When you hear the term “GPU processors,” you might picture a single powerful chip. But here is the twist: a modern GPU is not one processor; it packs hundreds or even thousands of small processors that work together. If you have ever wondered why, you are not alone. In this guide, we break down the reasons behind this design, its real advantages, and how this architecture powers everything from modern gaming to artificial intelligence.

The Main Difference Between CPUs and GPU Processors

To understand why GPUs contain hundreds of processors, it helps to see how they differ from CPUs.

- CPU: Few cores (generally 4–32), designed for general-purpose computing and sequential workloads.

- GPU: Hundreds or thousands of small cores (processors) designed for parallel computing, that is, running many tasks simultaneously.

Think of a CPU as a head chef who prepares one complicated dish to perfection. A GPU is more like a large kitchen staff in which everyone prepares a simple dish at the same time, which is fast and productive when the order is huge.

Why Hundreds of GPU Processors?

Here is the practical answer: GPUs are engineered for parallel processing. Many workloads, especially graphics rendering and AI, require the same kind of operation to be repeated many times on different data.
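
To make the idea of one operation repeated many times concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the `brighten` function, the 16-pixel image, and the four-worker pool are hypothetical, and CPU threads stand in for GPU cores. A real GPU would run a kernel written in something like CUDA.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel, amount=10):
    """Apply the same simple operation to one pixel (hypothetical example op)."""
    return min(pixel + amount, 255)

def brighten_chunk(chunk):
    """Each worker handles its own slice of pixels independently."""
    return [brighten(p) for p in chunk]

def split(pixels, parts):
    """Split a flat pixel list into roughly equal contiguous chunks."""
    size = max(1, (len(pixels) + parts - 1) // parts)
    return [pixels[i:i + size] for i in range(0, len(pixels), size)]

def brighten_parallel(pixels, workers=4):
    chunks = split(pixels, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(brighten_chunk, chunks)  # map preserves chunk order
    return [p for chunk in results for p in chunk]

# Hypothetical 16-pixel grayscale image (values 0-255)
image = [0, 50, 100, 150, 200, 250, 255, 30, 60, 90, 120, 180, 210, 240, 5, 15]
sequential = [brighten(p) for p in image]
parallel = brighten_parallel(image)
```

Because no pixel depends on any other, the chunked version produces the same result as the sequential loop. That independence is what lets a GPU spread the work over many cores.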

Core reasons

- Parallelism: Hundreds of cores allow calculations for pixels, data points, and other elements to happen at the same time.

- Speed for Repetitive Tasks: Well suited to large numbers of similar calculations, such as image processing and AI model training.

- Energy Efficiency: Many processors running at lower frequencies can use less energy than one massive processor running at high speed.
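
The energy point can be illustrated with the standard first-order model of dynamic power in a chip. This is a rough sketch, not a measurement: it assumes supply voltage $V$ can be lowered in proportion to clock frequency $f$, treats the switched capacitance $C$ per core as constant, and ignores leakage power and the overhead of coordinating cores.

$$P_{\text{one}} \approx C V^{2} f$$

$$P_{\text{two}} \approx 2 \cdot C \left(\tfrac{V}{2}\right)^{2} \left(\tfrac{f}{2}\right) = \tfrac{1}{4}\, C V^{2} f$$

Under these idealized assumptions, two cores at half the frequency deliver the same nominal throughput ($2 \times f/2 = f$) on perfectly parallel work while drawing about a quarter of the dynamic power. Real chips fall short of this because voltage cannot be scaled that freely.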

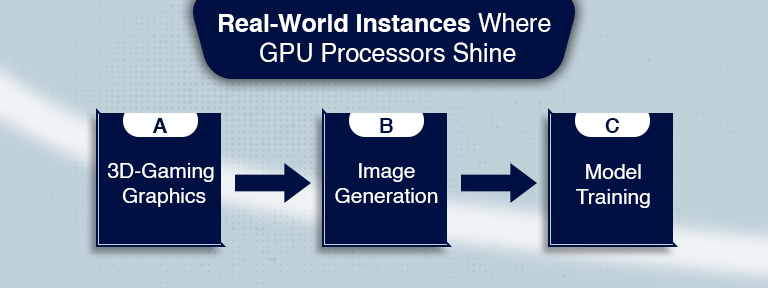

Real-World Instances Where GPU Processors Shine

a) Modern Gaming and 3D Graphics

- Each GPU processor handles a small portion of the screen’s pixels.

- Parallel rendering produces smooth graphics without stutter.

b) AI Image Generators

- Tools such as DALL·E or Midjourney rely on enormous numbers of matrix operations for every image.

- A GPU’s parallel processors make AI image generation far faster than it would be on a CPU alone.
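
Matrix operations suit GPUs because each output value can be computed on its own. The following Python sketch shows this for a small matrix multiplication. The function names and the 2x2 matrices are hypothetical, and the code loops on the CPU where a GPU would hand each cell to a different core.

```python
def matmul_cell(a, b, row, col):
    """Compute one output element: dot product of a row of `a` and a column of `b`."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))

def matmul(a, b):
    rows, cols = len(a), len(b[0])
    # Every (row, col) cell is independent of the others, so a GPU could
    # assign each one to a different core. Here we simply loop over them.
    return [[matmul_cell(a, b, r, c) for c in range(cols)] for r in range(rows)]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)  # [[19, 22], [43, 50]]
```

A model that generates images multiplies far larger matrices than this, which is why the number of independent cells, and so the benefit of many cores, grows quickly.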

c) AI Model Training

- A GPU server with many cores speeds up machine learning by distributing training data across its processors.
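
Distributing training data can be sketched as follows. This is a toy Python example under stated assumptions, not how any particular framework implements it: the model `y = w * x`, the four data points, and the two "workers" are hypothetical, and the shards are processed one after another where real hardware would process them at the same time.

```python
def gradient(w, batch):
    """Mean gradient of squared error for the toy model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def shard(data, parts):
    """Split data into `parts` equal shards (assumes len(data) divides evenly)."""
    size = len(data) // parts
    return [data[i * size:(i + 1) * size] for i in range(parts)]

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # points on y = 2x
w = 0.0  # initial weight

shards = shard(data, parts=2)                     # one shard per worker
shard_grads = [gradient(w, s) for s in shards]    # each worker computes its own gradient
combined = sum(shard_grads) / len(shard_grads)    # average the workers' results
full = gradient(w, data)                          # same gradient computed in one pass
```

With equal-sized shards, the averaged gradient matches the one computed over the whole batch, so splitting the data changes how the work is scheduled without changing the result.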

How GPU Servers Utilize Hundreds of Processors

When you buy or rent a GPU server, you are not getting one huge chip; you are getting hundreds or thousands of GPU processors that can run thousands of tasks in parallel.

Benefits for businesses:

- Improved performance for AI image generator tools.

- Faster AI model training in industries such as finance and healthcare.

- Real-time rendering for VFX and 3D modeling.

Service providers like GPU4HOST offer GPU dedicated servers and GPU hosting plans where these processors are tuned for your particular workload.

GPU Processors in AI GPUs and GPU Clusters

If you are scaling AI tasks, a single GPU may not be sufficient.

This is where GPU clusters stand out: many GPUs connected together, each with hundreds or thousands of GPU processors, forming a supercomputer-like setup.

For instance:

- NVIDIA A100 GPUs have 6,912 CUDA cores (GPU processors), making them a workhorse for machine learning and demanding computing.

- In a GPU cluster, multiple A100 cards can work together for even higher performance.

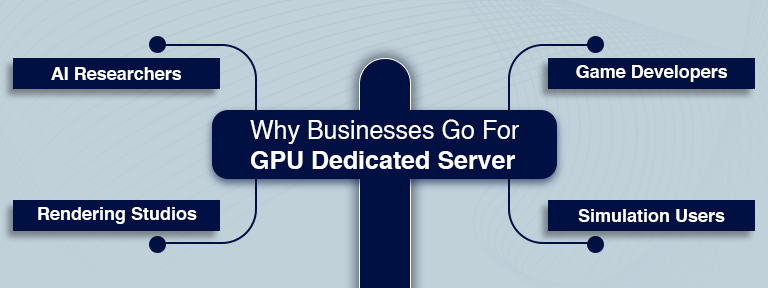

Why Businesses Choose a GPU Dedicated Server

A GPU dedicated server means that your workload gets the full capacity of all those GPU processors without sharing resources. This matters for:

- AI-based research teams

- Modern game developers

- 3D graphics rendering studios

- AI GPU users running large-scale simulations

With GPU hosting, you can scale up or down as project demands change, without purchasing physical hardware.

How GPU Processors Impact Cloud GPU Hosting

With GPU hosting, especially from service providers like GPU4HOST, the hundreds of processors are put to work on:

- On-demand AI model processing

- High-performance computing (HPC)

- AI image generator tasks

- Big data analysis

Because the cost of running these processors in the cloud is shared, organizations can access advanced hardware such as the NVIDIA A100 without an upfront investment.

Practical Advantages of Having Hundreds of GPU Processors

- Speed: Process large numbers of data points at the same time.

- Scalability: Handle more demanding tasks by adding more GPUs.

- Energy Efficiency: Complete big tasks quickly, which can reduce total power consumption.

- Versatility: From gaming graphics to AI training, they handle a wide range of workloads.

Quick FAQ

Q1: Can CPUs match the performance of hundreds of GPU processors?

Not for parallel tasks. CPUs excel at single-threaded, complex logic; GPUs dominate repetitive, high-volume workloads.

Q2: Do all GPU servers have the same number of GPU processors?

No. It depends on the GPU model. An NVIDIA A100, for example, has more processors than earlier models.

Q3: Is GPU hosting better than owning a GPU?

For short-term or rapidly scaling needs, yes. Service providers like GPU4HOST let you access advanced GPU processors without large upfront costs.

Final Thoughts

The reason GPUs have so many processors is simple: parallel power. Whether you are running an AI image generator, training deep learning models on a GPU server, or rendering high-resolution graphics, GPU processors can cut time-consuming jobs down dramatically.

With providers like GPU4HOST, which offer GPU dedicated servers and multi-GPU servers, you can tap the power of thousands of GPU processors without managing physical hardware, opening new opportunities for faster innovation, improved performance, and scalable computing.